Use Python to get overseas epidemic data

Demo

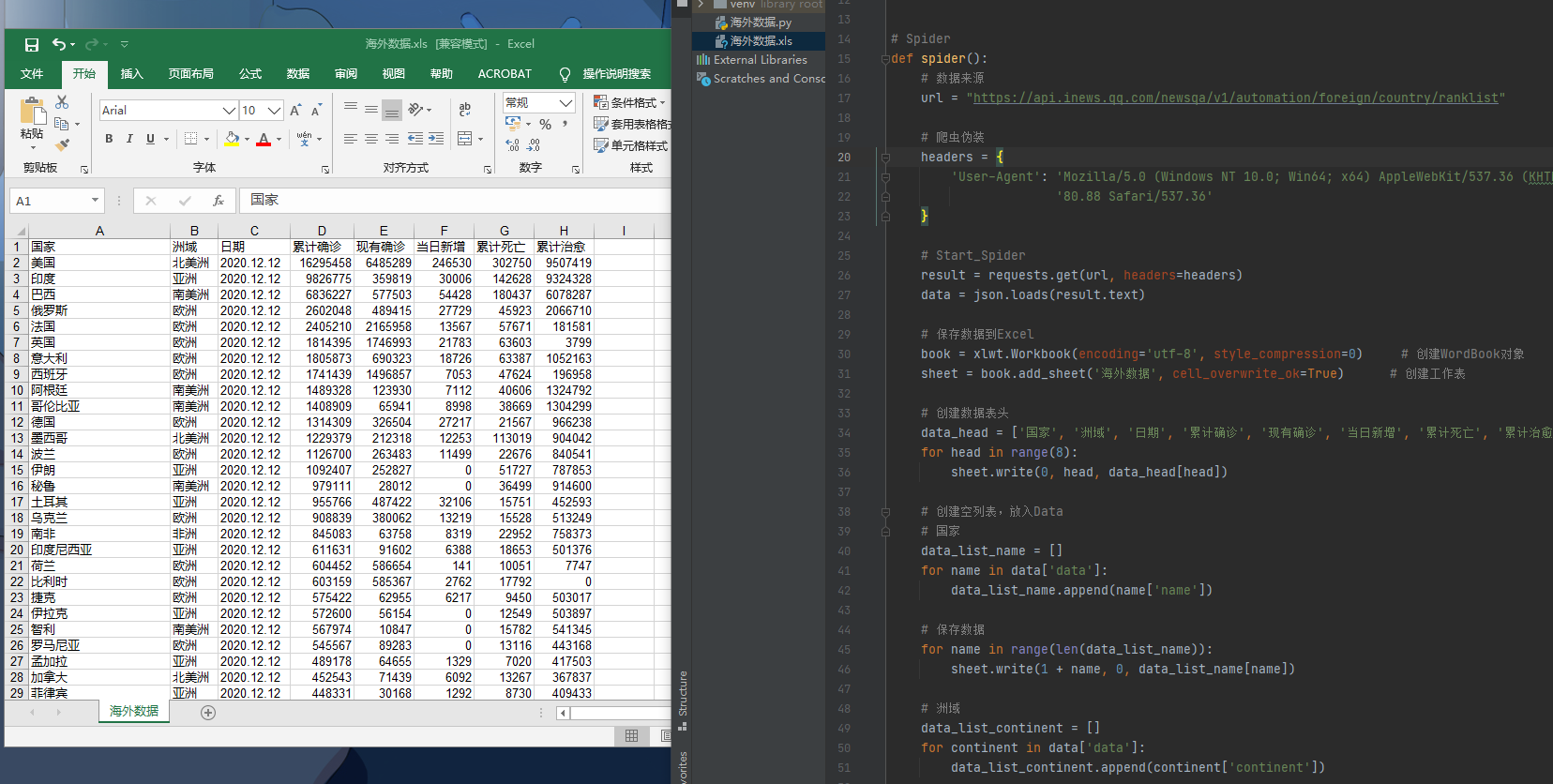

Explanation

The script requests overseas epidemic data, country by country, from Tencent's news API and saves it to an Excel (.xls) file.

Code

# -*- coding:utf-8 -*-
# @Author: Nuanxinqing
# @Time: 2020/12/12 17:35
# @File: 海外数据.py
# @Software: PyCharm

# Modules
import requests
import json
import xlwt
import time


# Spider
def spider():
    # Data source
    url = "https://api.inews.qq.com/newsqa/v1/automation/foreign/country/ranklist"

    # Disguise the crawler as a browser
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.42'
                      '80.88 Safari/537.36'
    }

    # Start_Spider
    result = requests.get(url, headers=headers)
    data = json.loads(result.text)

    # Save the data to Excel
    book = xlwt.Workbook(encoding='utf-8', style_compression=0)  # Create the Workbook object
    sheet = book.add_sheet('海外数据', cell_overwrite_ok=True)  # Create the worksheet ("overseas data")

    # Create the header row: country, continent, date, total confirmed,
    # currently confirmed, new today, total deaths, total recovered
    data_head = ['国家', '洲域', '日期', '累计确诊', '现有确诊', '当日新增', '累计死亡', '累计治愈']
    for head in range(8):
        sheet.write(0, head, data_head[head])

    # Create an empty list for each column and fill it with data

    # Country
    data_list_name = []
    for name in data['data']:
        data_list_name.append(name['name'])
    # Write the column
    for name in range(len(data_list_name)):
        sheet.write(1 + name, 0, data_list_name[name])

    # Continent
    data_list_continent = []
    for continent in data['data']:
        data_list_continent.append(continent['continent'])
    for continent in range(len(data_list_continent)):
        sheet.write(1 + continent, 1, data_list_continent[continent])

    # Date (the API value has no year, so the current year is prepended)
    year_time = time.strftime("%Y", time.localtime())
    data_list_date = []
    for date in data['data']:
        data_list_date.append(date['date'])
    for date in range(len(data_list_date)):
        sheet.write(1 + date, 2, year_time + '.' + data_list_date[date])

    # Total confirmed
    data_list_confirm = []
    for confirm in data['data']:
        data_list_confirm.append(confirm['confirm'])
    for confirm in range(len(data_list_confirm)):
        sheet.write(1 + confirm, 3, data_list_confirm[confirm])

    # Currently confirmed
    data_list_nowConfirm = []
    for nowConfirm in data['data']:
        data_list_nowConfirm.append(nowConfirm['nowConfirm'])
    for nowConfirm in range(len(data_list_nowConfirm)):
        sheet.write(1 + nowConfirm, 4, data_list_nowConfirm[nowConfirm])

    # New today
    data_list_confirmAdd = []
    for confirmAdd in data['data']:
        data_list_confirmAdd.append(confirmAdd['confirmAdd'])
    for confirmAdd in range(len(data_list_confirmAdd)):
        sheet.write(1 + confirmAdd, 5, data_list_confirmAdd[confirmAdd])

    # Total deaths
    data_list_dead = []
    for dead in data['data']:
        data_list_dead.append(dead['dead'])
    for dead in range(len(data_list_dead)):
        sheet.write(1 + dead, 6, data_list_dead[dead])

    # Total recovered
    data_list_heal = []
    for heal in data['data']:
        data_list_heal.append(heal['heal'])
    for heal in range(len(data_list_heal)):
        sheet.write(1 + heal, 7, data_list_heal[heal])

    # Save the workbook
    book.save('海外数据.xls')


# Start
if __name__ == '__main__':
    spider()
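
The script above fills the sheet one column at a time, with two loops per column. As a rough sketch of an alternative, the same records could be flattened into rows in a single pass. The helper name `records_to_rows` and the sample record are hypothetical and only for illustration; the field names are the keys the script reads from each entry of `data['data']`.

```python
import datetime

# Keys the script above reads from each record, in column order.
FIELDS = ["name", "continent", "date", "confirm",
          "nowConfirm", "confirmAdd", "dead", "heal"]


def records_to_rows(records, year=None):
    """Hypothetical helper: turn country records into spreadsheet rows."""
    if year is None:
        year = datetime.date.today().year
    rows = []
    for record in records:
        row = [record[field] for field in FIELDS]
        # Prepend the year to the "month.day" string, as the script does.
        row[2] = str(year) + "." + str(row[2])
        rows.append(row)
    return rows


# Made-up sample record, not real data from the API.
sample = [{"name": "Exampleland", "continent": "Europe", "date": "12.12",
           "confirm": 100, "nowConfirm": 40, "confirmAdd": 5,
           "dead": 10, "heal": 50}]
rows = records_to_rows(sample, year=2020)
```

Each row could then be written with a nested loop that calls `sheet.write(1 + r, c, value)` for every row index `r` and column index `c`, which would replace the eight separate column blocks.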

The End

The project is not finished yet. I plan to learn data visualization next, so stay tuned.


Copyright notice:

Author: Nuanxinqing

Link: https://6b7.org/83.html

Copyright of this article belongs to the author. Do not reproduce it without permission.
